Stephan Fabel

on 25 July 2019

The 10 new rules of open source infrastructure

Recently, I gave a keynote at the Cloud Native / OpenStack Days in Tokyo titled “The ten new rules of open source infrastructure”. It was well received, and folks pointed out on Twitter that they would like to see more detail around those ten rules. Others seemed to benefit from clarifying commentary. I’ve attempted to summarize the points I made during the talk here, and I’m happy to have a conversation or add more rules based on your observations in this space over the last ten years. I strongly believe there are some lasting concepts and axioms that hold true in infrastructure IT, and documenting some of them is important to guide the decisions that go into next-generation thinking as we evolve in this space.

1 Consume unmodified upstream.

The time for vendors to proclaim that they are able to somehow make open source projects “enterprise-ready” by releasing a “hardened” version of infrastructure software based on those upstream projects is over. OpenStack has been stable for multiple releases now and capable of addressing even the most advanced use cases and workloads without any vendor interference at all. See CERN. See AT&T.

I believe this is the most important rule of them all because it is self-limiting to not follow it: why would you restrict the number of people able to work on, support and innovate with your platform in production by introducing downstream patches? The whole point of open infrastructure is to be able to engage with the larger community for support and to create a common basis for hiring, training and innovating on your next-generation infrastructure platform.

2 Infrastructure-is-a-Product.

When implementing next-generation infrastructure, it is important to remember that you are essentially entering a market with competing alternatives for your constituency. In almost all cases that alternative is a public cloud, but even legacy stacks based on older technologies such as VMware can pose a significant risk, especially if your workload adoption is slow. Outreach, engagement with developers, and actively working on migrating workloads onto the new platform are critical to reaching a lower cost per computing unit than alternative platforms. The sooner that point is reached, the better.
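The link between workload adoption and cost per computing unit can be made concrete with a little arithmetic. The following is a minimal sketch, assuming a platform with a fixed monthly cost that is spread over the units actually consumed; the function names and all numbers are hypothetical and only illustrate the shape of the calculation.

```python
def cost_per_unit(fixed_monthly_cost: float, capacity_units: int, utilization: float) -> float:
    """Monthly cost per consumed compute unit on a fixed-cost platform."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return fixed_monthly_cost / (capacity_units * utilization)


def break_even_utilization(fixed_monthly_cost: float, capacity_units: int,
                           alternative_unit_price: float) -> float:
    """Utilization at which the platform matches the alternative's unit price."""
    return fixed_monthly_cost / (capacity_units * alternative_unit_price)


# Hypothetical numbers, for illustration only.
FIXED = 50_000.0   # monthly hardware amortization, power, staff
CAPACITY = 2_000   # compute units (for example vCPUs) the platform can serve
ALT_PRICE = 40.0   # monthly price per unit on the competing platform

for u in (0.25, 0.5, 0.75, 1.0):
    print(f"utilization {u:.0%}: {cost_per_unit(FIXED, CAPACITY, u):.2f} per unit")
print(f"break-even utilization: {break_even_utilization(FIXED, CAPACITY, ALT_PRICE):.1%}")
```

With these assumed numbers the platform only undercuts the alternative once more than 62.5% of its capacity is in use, which is why slow adoption is a risk in itself.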

Infrastructure should also not be treated as something “hand-crafted”. Large-scale implementations are without exception based on standardized components and on simplicity in architecture and parameter tuning. Being able to transfer knowledge of existing clusters to new teams, to train new staff, and to recover from the “expert departure” in your team is key, and the only way to achieve this is by avoiding customized reference architectures that introduce technical debt into your infrastructure.

3 Automate for Day 1826.

Almost no team has automated to the degree it ought to, and most realize this at some level but fail to do something about it. Part of the reason why certain tools have become popular with operators is that they address the first 80% of all automation use cases quite nicely, but do so at the cost of being able to reasonably address the rest. The result is that lifecycle management events such as upgrades, canarying, expansion and so on remain complicated, and the energy those events consume never goes down. A simple test is this: if you still have to SSH into a server to perform some task, you simply have not automated enough. Any and all events concerning that machine should be addressable via API and through a comprehensive automation and orchestration setup.

When choosing your orchestration automation, assume that the technology stack will change over the course of your hardware amortization period (typically five years). Your VMware of today might be an OpenStack of tomorrow, might turn into a Kubernetes cluster on top, right next to it on bare metal, or even be replaced by it. You just don’t know. Once the “Kubernetes of serverless” crystallizes, you will have to automate the deployment and integration of that technology. Maintaining the same operational paradigm across those events is more important than ever as upstream innovation cycles shorten and the number of releases per year increases.

4 Run at capacity on-prem. Use public cloud as overflow.

If the goal is to provide the best economics in the data center, running your on-premise infrastructure as close to capacity as possible is a natural consequence. Hardware should be chosen to provide the best value for the money invested in it. That may not always mean the lowest purchase price, but it will lead to the best economics overall, especially if the goal is to achieve cost structures comparable to public alternatives.

That said, don’t mistake this rule for a call to avoid public cloud. On the contrary, I recommend working with a minimum of two public cloud providers in addition to having a solid on-premise strategy that fulfills the economic parameters of your organization. Having two public cloud partners allows for healthy competition and enforces cloud-neutral automation in your operations, a key attribute of a successful multi-cloud strategy. While having certified administrators for public clouds is good, cloud-API-agnostic automation is better.
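To illustrate what “on-prem first, public cloud as overflow” could look like behind a cloud-neutral interface, here is a minimal sketch. The `Provider` protocol, the `FixedPool` stand-in and the `place` function are all hypothetical names invented for this example; a real implementation would wrap each provider's actual API behind the same interface.

```python
from dataclasses import dataclass
from typing import List, Protocol


class Provider(Protocol):
    """Hypothetical provider-neutral interface the automation codes against."""
    name: str

    def free_units(self) -> int: ...
    def launch(self, units: int) -> None: ...


@dataclass
class FixedPool:
    """Stand-in backend: could model an on-prem cluster or a public cloud account."""
    name: str
    capacity: int
    used: int = 0

    def free_units(self) -> int:
        return self.capacity - self.used

    def launch(self, units: int) -> None:
        if units > self.free_units():
            raise RuntimeError(f"{self.name} is full")
        self.used += units


def place(units: int, on_prem: Provider, overflow: List[Provider]) -> str:
    """Fill on-prem first; spill to the first public provider with room."""
    for provider in [on_prem, *overflow]:
        if provider.free_units() >= units:
            provider.launch(units)
            return provider.name
    raise RuntimeError("no provider has enough free capacity")


on_prem = FixedPool("on-prem", capacity=100)
clouds = [FixedPool("cloud-a", capacity=50), FixedPool("cloud-b", capacity=1000)]
placements = [place(n, on_prem, clouds) for n in (60, 30, 40, 20)]
print(placements)  # ['on-prem', 'on-prem', 'cloud-a', 'cloud-b']
```

Because `place` only talks to the `Provider` interface, swapping or adding a public cloud does not change the placement logic, which is the practical meaning of cloud-neutral automation here.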

5 Upgrade, don’t backport.

At Ubuntu, we have always endorsed this paradigm, and it continues to be true for open infrastructure. Upstream project support cycles are shortening (think of the number of supported releases and maintenance windows for OpenStack and Kubernetes, for example), and every backport introduces technical debt that raises the cost of literally every lifecycle management event that follows. Getting into the habit of upgrading instead is one of the most important rules to follow. With the right type of automation in place, upgrading should be predictable and solvable in a reasonable amount of time. Running your infrastructure as a product and consuming unmodified upstream code both contribute to that predictability; without them, upgrading is an almost unmanageable task at any scale.

6 Workload placement matters.

In a way, it’s understandable that workload placement gets little attention: the whole point of implementing a private cloud is so that you don’t have to worry about it. However, when you do care, it is typically almost impossible to establish any kind of debugging baseline. Clouds are by nature dynamic, so debugging what happened when some service-level violation occurred needs to take the changing nature of the infrastructure into account. All clouds of reasonable volume have this problem, and most operations teams ignore the necessity of maintaining the correlation between what happens at the bare metal level and what happens at the virtual and container level. The smaller the unit of consumable compute is (think large VMs vs small VMs vs fat containers, vs … you get the idea), the more dynamic the environment typically is, and establishing causation between symptoms and root cause gets exponentially harder the more layers you introduce. Think about workload placement as you onboard tenants, and establish the necessary telemetry to capture those events in their context. This will lead to predictive analysis, which ultimately may allow you to introduce AI into your operations (and the larger and more complex your cloud infrastructure is, the more urgent that will be).
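One way to keep that correlation is to record every placement event with a timestamp, so that the stack under a workload can be reconstructed for the moment an incident occurred. The sketch below is only an illustration under that assumption; `PlacementLog` and the workload and host names are hypothetical, and a real deployment would feed such a log from its scheduler and hypervisor telemetry.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class PlacementLog:
    """Records where each workload ran over time, so an incident at time t
    can be traced through the layers down to a physical host."""

    def __init__(self) -> None:
        # child -> list of (timestamp, parent), kept sorted by timestamp
        self._history: Dict[str, List[Tuple[float, str]]] = defaultdict(list)

    def record(self, timestamp: float, child: str, parent: str) -> None:
        """Note that `child` (container or VM) was placed on `parent`."""
        self._history[child].append((timestamp, parent))
        self._history[child].sort()

    def parent_at(self, child: str, timestamp: float) -> Optional[str]:
        """Return the most recent parent of `child` at or before `timestamp`."""
        parent = None
        for ts, candidate in self._history.get(child, []):
            if ts > timestamp:
                break
            parent = candidate
        return parent

    def chain_at(self, workload: str, timestamp: float) -> List[str]:
        """Walk container -> VM -> bare metal as it was at `timestamp`."""
        chain = [workload]
        while True:
            parent = self.parent_at(chain[-1], timestamp)
            if parent is None or parent in chain:  # stop at the top or on a cycle
                return chain
            chain.append(parent)


log = PlacementLog()
log.record(100, "vm-7", "host-a")
log.record(110, "pod-42", "vm-7")
log.record(200, "vm-7", "host-b")  # e.g. a live migration

print(log.chain_at("pod-42", 150))  # ['pod-42', 'vm-7', 'host-a']
print(log.chain_at("pod-42", 250))  # ['pod-42', 'vm-7', 'host-b']
```

The same pod resolves to different physical hosts depending on when the service-level violation happened, which is exactly the context a debugging baseline needs to preserve.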

7 Plan for transition

I made this point earlier, but it is unrealistic to expect a specific set of hardware to be tied to a specific infrastructure throughout the entirety of its lifespan. This is superbly exemplified in edge use cases. Telco edge specifically will have to address changing workload requirements over the next three years: some workloads will transition to containerized versions, others may remain as VMs, and some will remain on bare metal. Thus, the nature of the edge and its management requirements will evolve over that period. Consequently, it’s not the containerized “end state” that is worth designing for, but the state of transition. Again, full automation and an identical operational paradigm across bare-metal provisioning, virtual machine management, OpenStack and Kubernetes will play a crucial role in achieving this design goal.

8 Security should not be special

Security patches are patches; security operations are simply operations. Most cloud projects are devised between developers and operations, and they only infrequently involve the often separate “security team”. What happens is that after much debate, discussion and creation of a roll-out plan, security teams are confronted with “done deals”, to which they mostly react by throwing water on all of those plans. Security is a mindset and a posture that should be exhibited from the start and kept in focus as part of the requirements. Security isn’t special; it’s just as important and critical as any other non-functional requirement that the cluster has to meet in order to satisfy the stakeholders’ expectations. So involve security early and often, and stay close, unless you’re willing to start over deep into the process.

9 Embrace shiny objects

The whole point of open infrastructure is to foster innovation and to give companies a competitive edge through the acceleration of their next-generation application rollout. Why stand in the way? If your developers want containers, why not? If your developers want serverless, why not? Be part of the solution: deriding new technology stacks as “shiny objects” only highlights a lack of confidence in the existing operational paradigm and automation. Sure, it’s fun to engage in some commentary over a beer after work, and that is exactly where it should stay.

10 Be edgy, go Micro!

Shameless plug: I work for Canonical and I care very much about two innovative projects I would like to highlight.

Microstack is a project for OpenStack Edge use cases and is currently in beta. It installs a full OpenStack cluster on a single node and will support limited clustering once it goes GA. I’m super excited about it because it elegantly solves the majority of the requirements of small form-factor OpenStack in a clear and concise format, using the Snap application container format. It’s innovative, small, and deserves your attention.

Microk8s is the same for Kubernetes. A single snap package to install on any of the compatible Linux distributions, with a feature-rich add-on system that lets you provision service meshes such as Istio and Linkerd, Knative, Kubeflow and more. Check it out on microk8s.io.

Because both are distributed as snaps, they can be used in an IoT/Devices context as well as in a cloud or data center context. They can be installed with a single command:

$ snap install microstack --beta --classic

$ snap install microk8s --classic

Conclusion

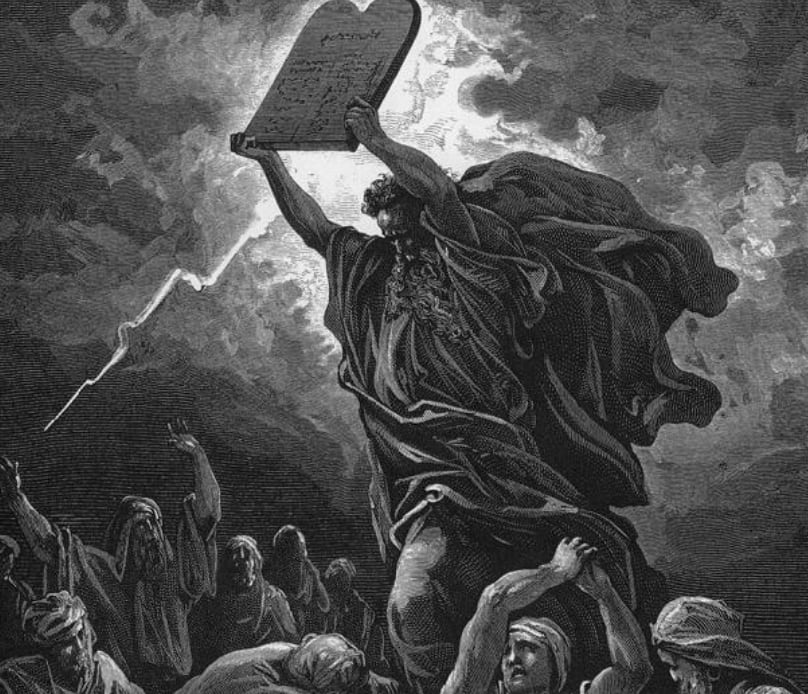

And that’s it: observations over the last ten years in the cloud industry, crystallized as ten new rules. Despite the intro image above, I don’t intend those rules to be taken as ‘commandments’, and I’m certainly no Moses in this regard. I am simply summarizing the observations I’ve made over the course of the last ten years as an OpenStack architect and now product manager in this space.